3D Detection

Overview

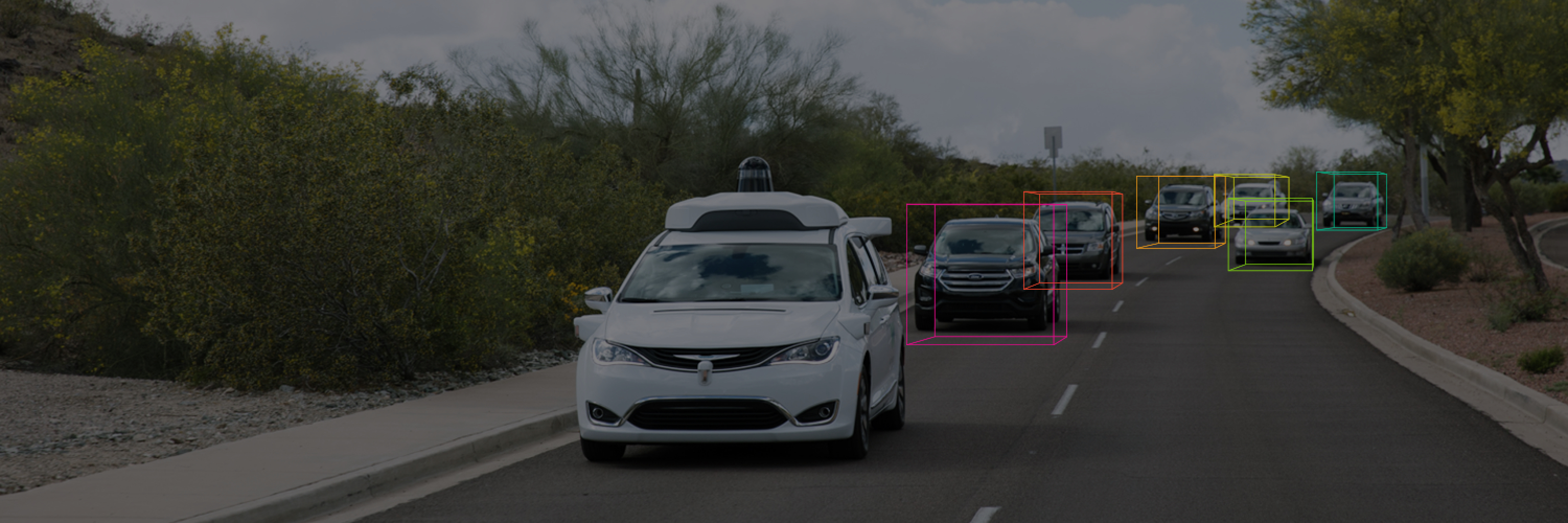

Given one or more lidar range images and the associated camera images, produce a set of 3D upright boxes for the objects in the scene. This challenge is a simplified version of the 3D tracking challenge: it ignores the temporal component. A baseline open-source model is linked below for reference.

Submit

Instructions

To submit your entry to the leaderboard, upload a file in the format specified by the Submission protos. This challenge does not have any awards. You can submit against the Test Set only 3 times every 30 days; submissions that error out do not count toward this limit.

Metrics

Leaderboard ranking for this challenge is by Mean Average Precision weighted by Heading at difficulty LEVEL_2 (mAPH/L2) over "ALL_NS" (all object types except signs), that is, the mean of the APHs of Vehicles, Cyclists, and Pedestrians. All sensors may be used. The system must be causal: for a frame at time step t, only sensor data up to time t can be used for its prediction.
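As a small illustration of the ranking metric, the sketch below averages per-class APH values over the three ALL_NS classes and ignores signs. The class-name strings and the dictionary input are assumptions for illustration, and the APH values in the usage line are made up, not leaderboard results.

```python
from statistics import mean
from typing import Mapping

# Hypothetical class keys for the three "ALL_NS" object types (signs excluded).
ALL_NS = ("VEHICLE", "PEDESTRIAN", "CYCLIST")


def mean_aph(per_class_aph: Mapping[str, float]) -> float:
    """Average the LEVEL_2 APH of each ALL_NS class; any other keys are ignored."""
    missing = [name for name in ALL_NS if name not in per_class_aph]
    if missing:
        raise ValueError(f"missing APH for: {missing}")
    return mean(per_class_aph[name] for name in ALL_NS)


# Illustrative numbers only; the SIGN entry does not affect the result.
print(mean_aph({"VEHICLE": 0.7, "PEDESTRIAN": 0.6, "CYCLIST": 0.5, "SIGN": 0.9}))
```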

Primary metric

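Average Precision Weighted by Heading (APH): $\mathrm{APH} = \int h(r)\,dr$, where $h(r)$ is the precision-recall (PR) curve with each true positive weighted by its heading accuracy. With the predicted heading $\tilde{\theta}$ and the ground-truth heading $\theta$ both in radians within $[-\pi, \pi]$, the heading error lies in $[0, \pi]$; dividing it by $\pi$ and subtracting the result from 1 gives a weight in $[0, 1]$:

$$\mathrm{TP} = 1 - \frac{\min\left(|\tilde{\theta} - \theta|,\; 2\pi - |\tilde{\theta} - \theta|\right)}{\pi}$$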

FP = 1 for each false positive (a predicted object with no matching ground-truth box)

FN = 1 for each false negative (a ground-truth box with no matching prediction)
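The per-true-positive heading weight can be sketched in a few lines of Python. This is a minimal sketch, assuming the weight is the heading accuracy, i.e. one minus the wrapped heading error divided by π; the function name is hypothetical. The wrap-around matters: headings of π − 0.1 and −π + 0.1 are treated as 0.2 rad apart, not nearly 2π apart.

```python
import math


def heading_accuracy(pred_heading: float, gt_heading: float) -> float:
    """Weight in [0, 1] for a true positive; both headings in radians within [-pi, pi]."""
    diff = abs(pred_heading - gt_heading)  # raw difference, in [0, 2*pi]
    error = min(diff, 2 * math.pi - diff)  # wrapped heading error, in [0, pi]
    return 1.0 - error / math.pi


print(heading_accuracy(0.0, math.pi / 2))  # a 90-degree error halves the weight
```

A box that matches at the IoU threshold but has its heading flipped by π still counts as a full true positive for AP, yet contributes zero weight to APH.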

Secondary metric

Average Precision (AP): $\mathrm{AP} = \int p(r)\,dr$, where $p(r)$ is the PR curve.
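A simplified sketch of how AP and APH relate. The procedure ranks detections by score, accumulates true positives and heading-weighted true positives, and sums precision times the recall gained at each rank. Several assumptions apply:

* Each detection has already been matched to ground truth at the class IoU threshold.
* Recall is unweighted in both curves, and only precision is heading-weighted for APH.
* The curve is integrated with a plain rectangle rule without interpolation.

The official evaluation may build and integrate the PR curves differently. The function name and the tuple layout are hypothetical.

```python
from typing import List, Tuple

# (score, is_true_positive, heading_accuracy); heading_accuracy is ignored for false positives.
Detection = Tuple[float, bool, float]


def ap_and_aph(detections: List[Detection], num_gt: int) -> Tuple[float, float]:
    """Return (AP, APH) for one class from matched, scored detections."""
    if num_gt <= 0:
        raise ValueError("need at least one ground-truth box")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = 0.0   # cumulative true positives
    tph = 0.0  # cumulative heading-weighted true positives
    prev_recall = ap = aph = 0.0
    for rank, (_, is_tp, h_acc) in enumerate(ranked, start=1):
        if is_tp:
            tp += 1.0
            tph += h_acc
        recall = tp / num_gt
        gained = recall - prev_recall  # nonzero only at ranks that add a true positive
        ap += (tp / rank) * gained      # p(r) dr
        aph += (tph / rank) * gained    # h(r) dr
        prev_recall = recall
    return ap, aph


# Two ground-truth boxes: a perfect TP, an FP, then a TP with a 90-degree heading error.
print(ap_and_aph([(0.7, True, 0.5), (0.9, True, 1.0), (0.8, False, 0.0)], num_gt=2))
```

Because false negatives never appear in the detection list, they lower the score only through `num_gt`, which caps the reachable recall.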

IoU Overlap Threshold

Vehicle 0.7, Pedestrian 0.5, Cyclist 0.5
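Matching uses 3D IoU between upright boxes, which are rotated only about the vertical axis. This is a minimal sketch, assuming each box is given by its center (with z the vertical center), length, width, height, and heading. The bird's-eye-view overlap comes from clipping one rotated rectangle against the other (Sutherland-Hodgman) and is then multiplied by the vertical overlap. The following are assumptions, not the official evaluation code:

* the `UprightBox` type;
* the class-name keys;
* treating an IoU exactly equal to the threshold as a match.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class UprightBox:
    """Hypothetical box type: center, size, and heading (yaw about z, radians)."""
    x: float
    y: float
    z: float  # assumed to be the vertical center of the box
    length: float
    width: float
    height: float
    heading: float

    def bev_corners(self) -> List[Point]:
        """Bird's-eye-view corners in counter-clockwise order."""
        c, s = math.cos(self.heading), math.sin(self.heading)
        hl, hw = self.length / 2, self.width / 2
        local = [(hl, hw), (-hl, hw), (-hl, -hw), (hl, -hw)]
        return [(self.x + c * u - s * v, self.y + s * u + c * v) for u, v in local]


def _cross(a: Point, b: Point, p: Point) -> float:
    """Positive when p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def _lerp(p: Point, q: Point, t: float) -> Point:
    return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))


def _clip(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman clipping of `subject` by the convex CCW polygon `clipper`."""
    output = subject
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        points, output = output, []
        for j in range(len(points)):
            prev, cur = points[j - 1], points[j]
            d_prev, d_cur = _cross(a, b, prev), _cross(a, b, cur)
            if d_cur >= 0:
                if d_prev < 0:  # entering the half-plane: add the crossing point
                    output.append(_lerp(prev, cur, d_prev / (d_prev - d_cur)))
                output.append(cur)
            elif d_prev >= 0:  # leaving the half-plane: add only the crossing point
                output.append(_lerp(prev, cur, d_prev / (d_prev - d_cur)))
        if not output:
            break
    return output


def _area(poly: List[Point]) -> float:
    """Shoelace formula; the absolute value makes vertex order irrelevant."""
    n = len(poly)
    twice = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                for i in range(n))
    return abs(twice) / 2


def iou_3d(a: UprightBox, b: UprightBox) -> float:
    overlap = _clip(a.bev_corners(), b.bev_corners())
    bev_inter = _area(overlap) if len(overlap) >= 3 else 0.0
    z_inter = max(0.0, min(a.z + a.height / 2, b.z + b.height / 2)
                  - max(a.z - a.height / 2, b.z - b.height / 2))
    inter = bev_inter * z_inter
    union = a.length * a.width * a.height + b.length * b.width * b.height - inter
    return inter / union if union > 0 else 0.0


# Thresholds listed in this section; keys are hypothetical class names.
IOU_THRESHOLD = {"VEHICLE": 0.7, "PEDESTRIAN": 0.5, "CYCLIST": 0.5}


def is_match(pred: UprightBox, gt: UprightBox, object_type: str) -> bool:
    # Assumption: an IoU exactly at the threshold counts as a match.
    return iou_3d(pred, gt) >= IOU_THRESHOLD[object_type]
```

For example, two 4 m × 2 m × 2 m boxes offset by 2 m along their length have an IoU of 1/3. That fails both the 0.7 Vehicle threshold and the 0.5 Pedestrian/Cyclist threshold.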

Sensor Names

L: All lidars

LT: Top lidar

C: All cameras

CLT: Camera and top lidar

CL: Camera and all lidars

I: Invalid

Preprocessing

Labeled boxes without any lidar points are not considered during evaluation.

Label Difficulty Breakdown

Each ground-truth label is assigned one of two difficulty levels (currently):

LEVEL_1: the box contains more than 5 lidar points and is not marked as LEVEL_2 in the released data.

LEVEL_2: the box contains 1 to 5 lidar points, or is marked as LEVEL_2 in the released data. LEVEL_2 metrics are computed over both LEVEL_1 and LEVEL_2 ground truth.
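The preprocessing rule and the two difficulty levels can be combined into one assignment step. In this sketch, `GroundTruthBox` and its fields are hypothetical stand-ins for the released label data. Restricting LEVEL_1 evaluation to LEVEL_1 boxes is an assumption. The sketch does not cover how the official evaluation treats predictions that match a LEVEL_2 box when scoring at LEVEL_1.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GroundTruthBox:
    """Hypothetical label record; field names are illustrative."""
    num_lidar_points: int
    marked_level_2: bool = False  # LEVEL_2 flag from the released data


def difficulty_level(box: GroundTruthBox) -> Optional[int]:
    """Return 1 or 2, or None for boxes dropped before evaluation."""
    if box.num_lidar_points < 1:
        return None  # no lidar points: not considered during evaluation
    if box.num_lidar_points <= 5 or box.marked_level_2:
        return 2
    return 1


def ground_truth_for(boxes: List[GroundTruthBox], level: int) -> List[GroundTruthBox]:
    """LEVEL_2 evaluation keeps LEVEL_1 and LEVEL_2 boxes; LEVEL_1 keeps LEVEL_1 only."""
    kept = []
    for box in boxes:
        d = difficulty_level(box)
        if d is not None and d <= level:
            kept.append(box)
    return kept


labels = [GroundTruthBox(0), GroundTruthBox(3), GroundTruthBox(10), GroundTruthBox(10, True)]
print(len(ground_truth_for(labels, 1)), len(ground_truth_for(labels, 2)))
```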

Metric Breakdown

The following metric breakdowns are supported:

OBJECT_TYPE: Breakdown by object type ("ALL_NS" refers to all objects except signs: Vehicle, Cyclist, and Pedestrian)

RANGE: Breakdown by the distance between the object center and the vehicle frame origin, in three buckets: [0, 35m), [35m, 50m), and [50m, +inf).
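A sketch of the OBJECT_TYPE and RANGE breakdowns, assuming object centers are given in the vehicle frame. It also assumes that "distance" means 3D Euclidean distance from the origin; if the evaluation uses planar x-y distance, drop the z term. Bucket labels mirror the list above, and the function names are hypothetical.

```python
import math
from typing import Dict, Iterable, List, Tuple

Center = Tuple[float, float, float]

# Half-open intervals [lo, hi) in meters, matching the RANGE breakdown.
RANGE_BUCKETS = [
    (0.0, 35.0, "[0, 35m)"),
    (35.0, 50.0, "[35m, 50m)"),
    (50.0, math.inf, "[50m, +inf)"),
]


def range_bucket(center: Center) -> str:
    x, y, z = center
    distance = math.sqrt(x * x + y * y + z * z)  # assumption: 3D Euclidean distance
    for lo, hi, label in RANGE_BUCKETS:
        if lo <= distance < hi:
            return label
    raise ValueError(f"invalid center: {center}")


def group_by_breakdown(objects: Iterable[Tuple[str, Center]]) -> Dict[Tuple[str, str], List[Center]]:
    """Group (object_type, center) pairs into (OBJECT_TYPE, RANGE) shards."""
    groups: Dict[Tuple[str, str], List[Center]] = {}
    for object_type, center in objects:
        groups.setdefault((object_type, range_bucket(center)), []).append(center)
    return groups
```

The half-open intervals put an object exactly 35 m away in the middle bucket and one exactly 50 m away in the far bucket.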

Baseline Model

You can find the baseline Lingvo model on GitHub.