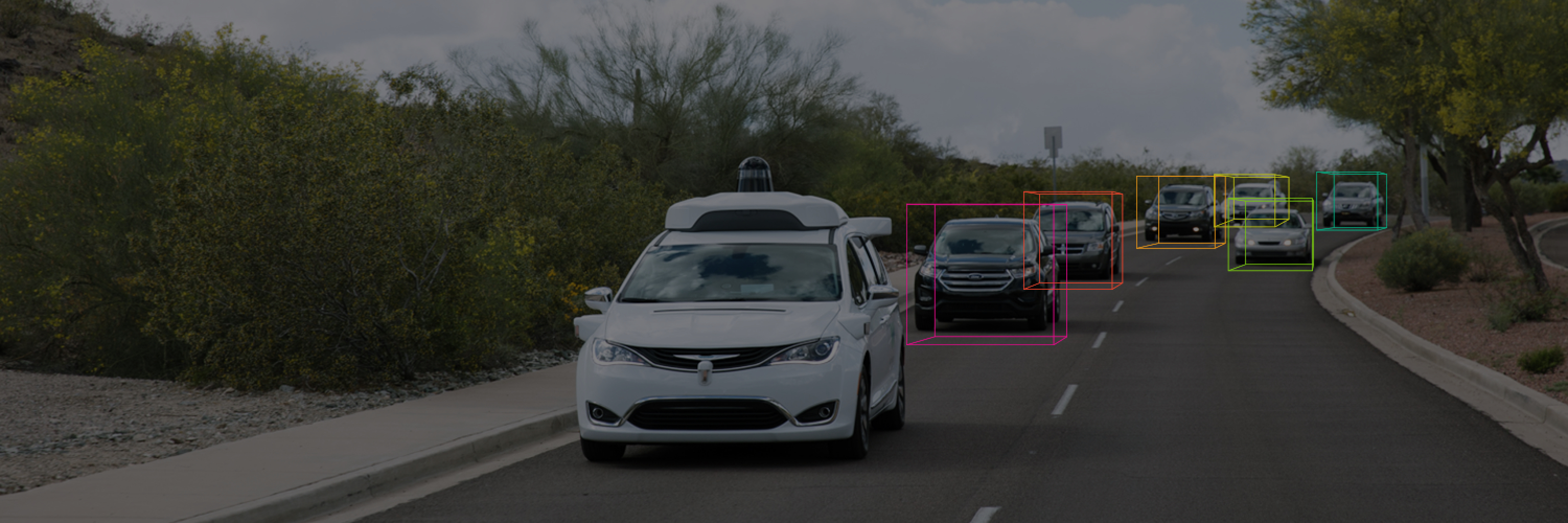

3D Camera-Only Detection

Overview

Given one or more images from multiple cameras, produce a set of 3D upright boxes for the visible objects in the scene. This is a variant of the original 3D Detection Challenge in which LiDAR sensing is not available. The existing dataset serves as the training and validation sets, and we provide a new test set for this challenge that contains camera data but no LiDAR data. As in the original 3D Detection Challenge, ground truth bounding boxes are limited to a maximum range of 75 meters.

The cameras mounted on our autonomous vehicles trigger sequentially in clockwise order, and each camera rapidly scans the scene horizontally using a rolling shutter. To help users reduce the effects of this rolling shutter timing, the dataset includes additional 3D bounding boxes that are synchronized with the cameras. Specifically, for each object in the scene, we provide a bounding box at the time when the center of the object is captured by the camera that perceives it best. To compute these boxes, we solve an optimization problem that accounts for the rolling shutter, the motion of the autonomous vehicle, and the motion of the object. For each object, the adjusted bounding box is stored in the field camera_synced_box, and the corresponding camera is indicated in the field most_visible_camera_name.

To shield users from the effects of the rolling shutter camera setup, our evaluation server compares the predicted bounding boxes with the ground truth bounding boxes that are synchronized with the rolling shutter cameras. We therefore advise users to train their models on the bounding boxes stored in the field camera_synced_box and then produce bounding boxes without further accounting for the rolling shutter. We believe this is a user-friendly approximation of the rolling shutter. Furthermore, in our sensor setup, even when an object is detected in adjacent cameras, the detections have similar shutter capture times and are likely to match the same 3D ground truth bounding box. For completeness, we note that more complex solutions that explicitly model the rolling shutter camera geometry are also possible.
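As a minimal sketch of the recommended training setup, the snippet below selects the camera-synchronized boxes as training targets. The `Box3D` and `LabeledObject` classes, the `training_targets` function, and the camera name "FRONT" are hypothetical stand-ins; only the field names `camera_synced_box` and `most_visible_camera_name` come from this page, and the dataset's actual proto layout may differ.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box3D:
    """Hypothetical upright 3D box: center, dimensions, and heading (radians)."""
    center_x: float
    center_y: float
    center_z: float
    length: float
    width: float
    height: float
    heading: float


@dataclass
class LabeledObject:
    """Hypothetical label record; field names mirror those described above."""
    box: Box3D                    # box at the frame timestamp
    camera_synced_box: Box3D      # box adjusted to the rolling shutter capture time
    most_visible_camera_name: str


def training_targets(objects: List[LabeledObject]) -> List[Tuple[str, Box3D]]:
    """Pair each object's camera-synchronized box with the camera that sees it best."""
    return [(obj.most_visible_camera_name, obj.camera_synced_box) for obj in objects]


frame_box = Box3D(20.0, 3.0, 0.8, 4.5, 2.0, 1.6, 0.0)
synced_box = Box3D(20.3, 3.0, 0.8, 4.5, 2.0, 1.6, 0.0)
print(training_targets([LabeledObject(frame_box, synced_box, "FRONT")]))
```

Because the evaluation server matches against the synchronized boxes, regressing `camera_synced_box` rather than the frame-time `box` keeps training targets consistent with what is scored.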

Some objects that are visible to the LiDAR sensors collectively may not be visible to the cameras. To help users filter out such fully occluded objects, we provide a new field, num_top_lidar_points_in_box, which is similar to the existing field num_lidar_points_in_box but counts only points from our top LiDAR. Since the cameras are collocated with the top LiDAR, an object is likely occluded if its ground truth 3D bounding box contains no top LiDAR points. Our evaluation server uses the same heuristic to ignore fully occluded objects.
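The occlusion heuristic reduces to a single comparison per object. The sketch below applies it to a hypothetical minimal label record; only the field names `num_lidar_points_in_box` and `num_top_lidar_points_in_box` come from this page, while `LabeledObject`, `object_id`, and `filter_camera_visible` are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LabeledObject:
    """Hypothetical minimal label record; field names mirror those described above."""
    object_id: str
    num_lidar_points_in_box: int
    num_top_lidar_points_in_box: int


def filter_camera_visible(objects: List[LabeledObject]) -> List[LabeledObject]:
    """Keep objects with at least one top LiDAR point inside their box.

    Objects with zero top LiDAR points are treated as fully occluded from the
    cameras, mirroring the heuristic described above.
    """
    return [obj for obj in objects if obj.num_top_lidar_points_in_box > 0]


labels = [
    LabeledObject("a", num_lidar_points_in_box=120, num_top_lidar_points_in_box=95),
    # Seen only by the other LiDARs, so likely invisible to the cameras.
    LabeledObject("b", num_lidar_points_in_box=14, num_top_lidar_points_in_box=0),
]
print([obj.object_id for obj in filter_camera_visible(labels)])  # ['a']
```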

For training, users may use both the camera and the LiDAR data. For inference, only camera data is available; no LiDAR data may be used. Furthermore, when computing predictions for a frame, users may only use sensor data from that frame and previous frames, not from any subsequent frames. The leaderboard ranks submissions by a new metric, LET-3D-APL. See our paper on the metric for more details.
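One way to respect the "current and previous frames only" rule in an inference loop is to feed the model from a history buffer that is filled strictly in time order. The helper `predict_causally` and its `max_history` parameter below are hypothetical; the frames can be any records holding camera data, and the helper does not itself check that no LiDAR data is passed in.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List, Optional, TypeVar

Frame = TypeVar("Frame")
Prediction = TypeVar("Prediction")


def predict_causally(
    frames: Iterable[Frame],
    predict: Callable[[List[Frame]], Prediction],
    max_history: Optional[int] = None,
) -> Iterator[Prediction]:
    """Yield one prediction per frame using only that frame and earlier ones.

    `predict` receives the buffered frames oldest-first; the last element is
    the current frame. No frame after the current one is ever passed in.
    """
    history: Deque[Frame] = deque(maxlen=max_history)  # None means unbounded
    for frame in frames:
        history.append(frame)
        yield predict(list(history))


# Usage: a "model" that just reports which frames it was allowed to see.
print(list(predict_causally([1, 2, 3], tuple, max_history=2)))  # [(1,), (1, 2), (2, 3)]
```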

Leaderboard

Disqualified from the 2022 Waymo Open Dataset Challenge

Submit

To submit your entry to the leaderboard, upload a file in the format specified in the Submission protos. You can submit against the test set at most 3 times every 30 days. Submissions that error out do not count against this limit.

Metrics

The popular detection metric 3D Average Precision (3D-AP) relies on the intersection over union (IoU) between predicted and ground truth bounding boxes. However, depth estimation from cameras has limited accuracy, which can cause otherwise reasonable predictions to be counted as false positives and the corresponding ground truth objects as false negatives. Depth estimation errors are the dominant reason why 3D-AP scores of camera-based detections are significantly lower than those of LiDAR-based detections. In this challenge, we therefore use variants of the popular 3D-AP metric that are designed to be more permissive with respect to depth estimation errors. Specifically, our novel longitudinal error tolerant metrics, LET-3D-AP and LET-3D-APL, allow longitudinal localization errors of the predicted bounding boxes up to a given tolerance. See our paper on the metric for more details. In this challenge, LET-3D-APL is the ranking metric.
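To see how sensitive IoU-based matching is to depth, consider a car 40 m ahead whose predicted center is only 2 m (5% of the distance) too far away along the line of sight. The sketch below uses axis-aligned boxes and illustrative car dimensions for simplicity; the actual metric works with heading-aware upright boxes, and `axis_aligned_iou` is a hypothetical helper.

```python
from typing import Sequence


def axis_aligned_iou(center_a: Sequence[float], size_a: Sequence[float],
                     center_b: Sequence[float], size_b: Sequence[float]) -> float:
    """3D IoU of two axis-aligned boxes given centers and (length, width, height)."""
    intersection = 1.0
    for ca, sa, cb, sb in zip(center_a, size_a, center_b, size_b):
        low = max(ca - sa / 2, cb - sb / 2)
        high = min(ca + sa / 2, cb + sb / 2)
        intersection *= max(0.0, high - low)
    volume_a = size_a[0] * size_a[1] * size_a[2]
    volume_b = size_b[0] * size_b[1] * size_b[2]
    return intersection / (volume_a + volume_b - intersection)


car_size = (4.5, 2.0, 1.6)              # illustrative car dimensions in meters
ground_truth_center = (40.0, 0.0, 0.0)  # 40 m straight ahead of the camera
predicted_center = (42.0, 0.0, 0.0)     # 2 m too far along the line of sight
iou = axis_aligned_iou(ground_truth_center, car_size, predicted_center, car_size)
print(round(iou, 3))  # 0.385, below the 0.5 vehicle threshold
```

A 5% depth error is modest for a monocular estimate, yet this prediction would be scored as a false positive and the car as a false negative under plain 3D-AP.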

We define the longitudinal localization error as the component of the object center localization error along the line of sight between the camera and the center of the ground truth bounding box. The maximum longitudinal error that our system tolerates for a prediction to be associated with a ground truth object is 10% of the distance between the camera and the center of the ground truth object. We also define the localization affinity of a prediction with respect to a ground truth object as follows:

The localization affinity is 1.0 when there is no longitudinal localization error.

The localization affinity is 0.0 when the longitudinal localization error is equal to or exceeds the maximum longitudinal localization error.

We linearly interpolate the localization affinity in between.
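The definitions above can be written out directly. In this sketch, `camera_center` is assumed to be the camera position expressed in the same coordinate frame as the box centers, and `tolerance=0.1` encodes the 10% rule; the function names are illustrative, not part of any official API.

```python
import math
from typing import Sequence


def _sub(a: Sequence[float], b: Sequence[float]) -> list:
    return [x - y for x, y in zip(a, b)]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def longitudinal_error(pred_center, gt_center, camera_center):
    """Component of the center error along the camera -> ground truth line of sight."""
    line_of_sight = _sub(gt_center, camera_center)
    distance = math.sqrt(_dot(line_of_sight, line_of_sight))
    unit = [x / distance for x in line_of_sight]
    return abs(_dot(_sub(pred_center, gt_center), unit))


def localization_affinity(pred_center, gt_center, camera_center, tolerance=0.1):
    """1.0 at zero longitudinal error, 0.0 at or beyond the tolerance, linear between."""
    line_of_sight = _sub(gt_center, camera_center)
    max_error = tolerance * math.sqrt(_dot(line_of_sight, line_of_sight))
    error = longitudinal_error(pred_center, gt_center, camera_center)
    return max(0.0, 1.0 - error / max_error)


camera = (0.0, 0.0, 0.0)
gt = (40.0, 0.0, 0.0)  # maximum tolerated longitudinal error: 4 m
print(localization_affinity((42.0, 0.0, 0.0), gt, camera))  # 0.5
print(localization_affinity((44.5, 0.0, 0.0), gt, camera))  # 0.0
print(localization_affinity((40.0, 1.0, 0.0), gt, camera))  # 1.0 (lateral error only)
```

Note that a purely lateral offset leaves the affinity at 1.0; such errors are handled by the IoU check rather than by the affinity.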

We consider all pairs of predicted bounding boxes and ground truth objects. For each pair, we correct the longitudinal localization error by shifting the predicted bounding box along the line of sight between the camera and the center of the predicted bounding box, to the position that minimizes the distance between the centers of the predicted bounding box and the ground truth object. We then compute the IoU using this corrected bounding box and refer to it as the longitudinal error tolerant IoU (LET-IoU). A pair is valid if it has a nonzero localization affinity and a LET-IoU that reaches the IoU threshold for its class: 0.5 for vehicles, and 0.3 for pedestrians and cyclists.
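The correction step is a projection: the point on the prediction's line of sight closest to the ground truth center is the projection of that center onto the line. The sketch below computes the corrected center, the LET-IoU, and the validity check. It assumes axis-aligned boxes (a simplification of heading-aware 3D IoU), keeps the predicted box size when shifting, and uses hypothetical names such as `let_iou_pair` and `IOU_THRESHOLDS`; the thresholds themselves come from the text.

```python
import math
from typing import Sequence, Tuple

Vec = Sequence[float]

# IoU thresholds per class, as stated above.
IOU_THRESHOLDS = {"vehicle": 0.5, "pedestrian": 0.3, "cyclist": 0.3}


def _sub(a: Vec, b: Vec) -> list:
    return [x - y for x, y in zip(a, b)]


def _dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def axis_aligned_iou(center_a: Vec, size_a: Vec, center_b: Vec, size_b: Vec) -> float:
    """Simplified 3D IoU for axis-aligned boxes (the real metric uses headings)."""
    intersection = 1.0
    for ca, sa, cb, sb in zip(center_a, size_a, center_b, size_b):
        overlap = min(ca + sa / 2, cb + sb / 2) - max(ca - sa / 2, cb - sb / 2)
        intersection *= max(0.0, overlap)
    vol_a = size_a[0] * size_a[1] * size_a[2]
    vol_b = size_b[0] * size_b[1] * size_b[2]
    return intersection / (vol_a + vol_b - intersection)


def corrected_center(pred_center: Vec, gt_center: Vec, camera_center: Vec) -> list:
    """Slide the prediction along its own line of sight to the point nearest the ground truth."""
    ray = _sub(pred_center, camera_center)
    norm = math.sqrt(_dot(ray, ray))
    unit = [x / norm for x in ray]
    t = _dot(_sub(gt_center, camera_center), unit)  # projection onto the line of sight
    return [c + t * u for c, u in zip(camera_center, unit)]


def localization_affinity(pred_center: Vec, gt_center: Vec, camera_center: Vec,
                          tolerance: float = 0.1) -> float:
    los = _sub(gt_center, camera_center)
    distance = math.sqrt(_dot(los, los))
    error = abs(_dot(_sub(pred_center, gt_center), los)) / distance
    return max(0.0, 1.0 - error / (tolerance * distance))


def let_iou_pair(pred_center: Vec, pred_size: Vec, gt_center: Vec, gt_size: Vec,
                 camera_center: Vec, label: str) -> Tuple[float, float, bool]:
    """Return (LET-IoU, affinity, is_valid) for one prediction/ground truth pair."""
    shifted = corrected_center(pred_center, gt_center, camera_center)
    let_iou = axis_aligned_iou(shifted, pred_size, gt_center, gt_size)
    # The affinity is measured on the uncorrected prediction.
    affinity = localization_affinity(pred_center, gt_center, camera_center)
    is_valid = affinity > 0.0 and let_iou >= IOU_THRESHOLDS[label]
    return let_iou, affinity, is_valid


car = (4.5, 2.0, 1.6)
camera = (0.0, 0.0, 0.0)
gt = (40.0, 0.0, 0.0)
print(let_iou_pair((42.0, 0.0, 0.0), car, gt, car, camera, "vehicle"))  # (1.0, 0.5, True)
print(let_iou_pair((45.0, 0.0, 0.0), car, gt, car, camera, "vehicle"))  # (1.0, 0.0, False)
```

The second prediction overlaps perfectly once shifted, but its 5 m longitudinal error exceeds the 4 m tolerance, so the pair is still rejected.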

For all valid pairs, we compute the optimal assignment of predictions to ground truth objects via bipartite matching, where the weight of each pair is its LET-IoU scaled by its localization affinity. Predictions that are associated with a ground truth object are true positive (TP) predictions, predictions that are not associated with any ground truth object are false positive (FP) predictions, and ground truth objects that are not associated with any prediction are false negatives (FN). This allows us to compute a PR curve.
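The sketch below finds the maximum-weight assignment by exhaustive search and derives TP, FP, and FN counts for one fixed set of predictions. It is only meant for tiny inputs; practical implementations typically use the Hungarian algorithm (for example, `scipy.optimize.linear_sum_assignment`). The function names are hypothetical, and invalid pairs are assumed to carry weight 0.0. The example also shows why the assignment must be optimal: pairing greedily on the largest weight (0.9) would leave only one TP.

```python
from typing import Dict, List, Set, Tuple


def optimal_matching(weights: List[List[float]], num_gt: int) -> Dict[int, int]:
    """Exhaustive maximum-weight bipartite matching for small inputs.

    weights[i][j] is LET-IoU * localization affinity for prediction i and
    ground truth j, or 0.0 if the pair is not valid. Runtime is exponential.
    """
    best: Dict[int, int] = {}
    best_score = -1.0

    def search(i: int, used: Set[int], current: Dict[int, int], score: float) -> None:
        nonlocal best, best_score
        if i == len(weights):
            if score > best_score:
                best, best_score = dict(current), score
            return
        search(i + 1, used, current, score)  # prediction i stays unmatched
        for j in range(num_gt):
            if weights[i][j] > 0.0 and j not in used:
                used.add(j)
                current[i] = j
                search(i + 1, used, current, score + weights[i][j])
                used.remove(j)
                del current[i]

    search(0, set(), {}, 0.0)
    return best


def count_outcomes(weights: List[List[float]], num_gt: int) -> Tuple[int, int, int]:
    """Return (TP, FP, FN) counts from the optimal matching."""
    matches = optimal_matching(weights, num_gt)
    tp = len(matches)
    return tp, len(weights) - tp, num_gt - tp


weights = [[0.9, 0.6],
           [0.8, 0.0]]
print(optimal_matching(weights, num_gt=2))  # {0: 1, 1: 0}
print(count_outcomes(weights, num_gt=2))    # (2, 0, 0)
```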

Finally, we define two longitudinal error tolerant metrics, LET-3D-AP and LET-3D-APL. First, LET-3D-AP is the average precision computed from the counts of TPs, FPs, and FNs. It does not penalize the longitudinal localization errors that were corrected during matching, so it is comparable to the popular 3D-AP metric but with more tolerant matching criteria. In contrast, LET-3D-APL penalizes longitudinal localization errors by scaling the precision with the localization affinity. In this challenge, only LET-3D-APL is used to rank submissions on the leaderboard.
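The difference between the two metrics can be sketched on already-matched, score-ranked predictions. This sketch assumes that LET-3D-APL replaces each TP's unit contribution to precision with its localization affinity, and it uses all-point interpolation of the PR curve. The exact sampling and interpolation used by the evaluation server are not described on this page and may differ. `average_precision` and `penalize_longitudinal` are hypothetical names.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool],
                      affinities: Sequence[float], num_gt: int,
                      penalize_longitudinal: bool = False) -> float:
    """Area under an interpolated PR curve built from score-ranked predictions.

    Assumes num_gt > 0. With penalize_longitudinal=True, each true positive
    contributes its localization affinity instead of 1 to the precision.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    precisions: List[float] = []
    recalls: List[float] = []
    tp_count, weighted_tp = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if is_tp[i]:
            tp_count += 1
            weighted_tp += affinities[i]
        hits = weighted_tp if penalize_longitudinal else tp_count
        precisions.append(hits / rank)
        recalls.append(tp_count / num_gt)
    # Make precision monotonically non-increasing in recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    area, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        area += precision * (recall - previous_recall)
        previous_recall = recall
    return area


scores = [0.9, 0.8, 0.7]
is_tp = [True, False, True]
affinities = [1.0, 0.0, 0.5]  # affinity of each TP; ignored for FPs
print(round(average_precision(scores, is_tp, affinities, num_gt=2), 4))  # 0.8333
print(round(average_precision(scores, is_tp, affinities, num_gt=2,
                              penalize_longitudinal=True), 4))  # 0.75
```

Both values share the same matching; only the second is reduced by the third prediction's 50% affinity.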

Rules Regarding Awards

See the Official Challenge Rules here.