Sim Agents

Overview

Given agents' tracks for the past 1 second on a corresponding map, simulate 32 realistic joint futures for all the agents in the scene.

Testing in simulation has become a key ingredient for ADV development, making it more scalable and robust. A naive strategy for simulation would rely upon playing back the sensor data the ADV experienced in the real world, making small changes to the software and seeing how the scenario would have played out. This strategy has inherent problems, such as the inability of playback objects to respond to ADV behavior changes, and therefore we need simulation agents (“sim agents”) that realistically react to our actions.

The Sim Agents Challenge treats simulation as a distribution matching problem: There exists a distribution of driving scenarios in the real world, and we would like to come up with a stochastic simulator defined over the same domain. We say the simulator is “realistic” when these two distributions are the same. Although real world scenarios are stochastic (i.e., agents may do different things from the same initial conditions), we only ever record one future for each history. Fortunately, we can sample sim agent behaviors from the simulator an arbitrary number of times under the same initial conditions. To quantify the mismatch between simulated and logged agents, we therefore measure the likelihood of the real scenarios under the density estimated by sampling the sim agents. As the agents become more realistic, the distribution over their behavior should assign high likelihood to the logged samples.

We employ a collection of behavior-characterizing metrics that measure likelihoods over three categories: motion, agent interactions, and road / map adherence. These are described in more detail in the Evaluation section below. To compute these metrics, we require a collection of 32 different futures, with scene-consistent, interactive agent behavior, for each initial scenario (which provides 1 second of history).

The interface we use for the Sim Agents Challenge is similar to the existing Motion Prediction challenges. Sim agent trajectories (i.e., sequences of bounding boxes in x/y/z) should be sampled from a simulator or simulation model in an autoregressive fashion and use the same training, validation, and testing datasets used for the Motion Prediction Challenge.

The use of Lidar data in Motion Dataset v1.2 is optional.

Leaderboard

This is the V1 leaderboard, where we have improved the accuracy of the collision and off-road computation over the previous V0 Sim Agents Challenge leaderboard.

Submit

Submissions for this version of the challenge are closed. You can submit to the 2025 version of the Sim Agents challenge.

How to simulate

While this challenge requires the simulation of agent behaviors, we are not restricting entrants to a specific simulator. Entrants may leverage existing simulator code or infrastructure. We only require entrants to upload simulated trajectories, but we do introduce a couple of requirements for making valid submissions. Please refer to the notebook tutorial for a detailed and practical description of these requirements.

For a simulation to be valid, entrants need to simulate the behavior of all the valid objects: these correspond to all those tracks from the Scenario proto which are valid at the last step of history (for the test set data). We simulate car, pedestrian, and cyclist world agents.

We also require entrants to simulate the ADV. Since the goal of the challenge is to produce realistic traffic scenarios, the ADV agent will be judged by the same imitation metrics as the world agents.

Since users do not have access to the test set “future” data, we also make the following assumptions:

The estimated dimensions of each object's bounding box stay fixed at their values from the last step of history (although they do change in the original data).

Objects coming into the scene after the first simulation timestep are not currently considered part of the simulation.
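As a minimal sketch of the validity rule and the fixed-dimension assumption above, the snippet below picks the objects to simulate and reads off the box dimensions to hold constant. The `TrackState` class and both helper functions are hypothetical, simplified stand-ins for illustration; they are not the Scenario proto or any library API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TrackState:
    """One timestep of one track (hypothetical, simplified layout)."""
    x: float
    y: float
    z: float
    heading: float
    length: float
    width: float
    height: float
    valid: bool


def objects_to_simulate(tracks: Dict[int, List[TrackState]],
                        last_history_step: int) -> List[int]:
    """Return the ids of tracks that are valid at the last step of history."""
    return sorted(
        obj_id for obj_id, states in tracks.items()
        if states[last_history_step].valid
    )


def fixed_dimensions(tracks: Dict[int, List[TrackState]], obj_id: int,
                     last_history_step: int) -> Tuple[float, float, float]:
    """Box dimensions (length, width, height) to hold constant during simulation."""
    state = tracks[obj_id][last_history_step]
    return (state.length, state.width, state.height)
```

Objects that are invalid at the last history step, including ones that only appear later, are simply never returned by `objects_to_simulate`.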

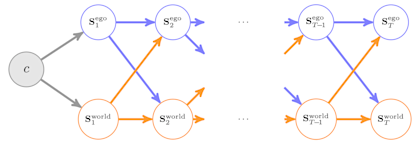

In contrast with many existing behavior prediction challenges, we require that entrants adhere to the following factorization of world agents and the ADV agent, where c is the set of input information about a scene (e.g., the static map and past positions of some agents), \(s_t^{\text{world}}\) refers to the combined positions of every agent except for the ADV at time t, and \(s_t^{\text{ADV}}\) refers to the ADV’s position at time t:

\begin{equation}
p(S_{1:T}^{\text{ADV}}, S_{1:T}^{\text{world}} \mid c) = \prod_{t=1}^{T} \pi_{\text{ADV}}(S_t^{\text{ADV}} \mid s_{<t}^{\text{ADV}}, s_{<t}^{\text{world}}, c) \cdot p(S_t^{\text{world}} \mid s_{<t}^{\text{ADV}}, s_{<t}^{\text{world}}, c)
\end{equation}

This factorization corresponds to the graphical model shown in the figure above, in which each directed edge carries forward all ancestors of the source node as inputs to the target node.

Entrants may further factorize the world model \(p(S_t^\text{world} | s_{< t}^{\text{ADV}}, s_{< t}^{\text{world}}, c)\) if desired, but this is not required.

In other words, eligible submissions should be produced by models factorized into two autoregressive components: World and ADV. These models should be conditionally independent from one another given the state of all the objects in the scene. We require this conditional independence assumption to ensure that the world model could, in principle, be used with new “releases” of the ADV model.
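One way to picture this requirement is a rollout loop in which the ADV policy and the world model are separate callables that both read the same past states and never see each other's output for the current step. The sketch below is illustrative only: the `(x, y)` state, the `context` dictionary, and the constant-velocity toy models are assumptions made for this example, not part of the challenge interface.

```python
import random
from typing import Dict, List, Tuple

State = Tuple[float, float]            # hypothetical state: (x, y) only
Step = Tuple[State, Dict[int, State]]  # (ADV state, world states by object id)


def rollout(adv_policy, world_model, context, history: List[Step],
            num_steps: int, rng: random.Random) -> List[Step]:
    """Sample one joint future, one step at a time."""
    past = list(history)
    for _ in range(num_steps):
        # Both components condition on the same past states (s_<t) and context c.
        # Neither one sees the other's output for the current step t.
        adv_next = adv_policy(past, context, rng)
        world_next = world_model(past, context, rng)
        past.append((adv_next, world_next))
    return past[len(history):]


def _extrapolate(prev: State, last: State, rng: random.Random,
                 noise: float) -> State:
    """Constant-velocity step with Gaussian noise (toy model, an assumption)."""
    return tuple(2 * b - a + rng.gauss(0.0, noise) for a, b in zip(prev, last))


def toy_adv_policy(past, context, rng) -> State:
    return _extrapolate(past[-2][0], past[-1][0], rng, context["noise"])


def toy_world_model(past, context, rng) -> Dict[int, State]:
    prev, last = past[-2][1], past[-1][1]
    return {obj_id: _extrapolate(prev[obj_id], last[obj_id], rng, context["noise"])
            for obj_id in last}


# Two history steps for the ADV and one world agent (id 7), then 32 rollouts.
history = [((0.0, 0.0), {7: (10.0, 0.0)}), ((1.0, 0.0), {7: (10.0, 1.0)})]
rng = random.Random(0)
futures = [rollout(toy_adv_policy, toy_world_model, {"noise": 0.05},
                   history, 5, rng) for _ in range(32)]
```

Because `rollout` only touches the two components through their call signatures, a different `adv_policy` can be swapped in without changing `toy_world_model`, which is the property the conditional independence requirement is meant to protect.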

However, we do not enforce any motion model, in part because there are multiple agent types. This means entrants must directly report the x/y/z coordinates and heading of the objects’ boxes (which could be generated directly or through an appropriate motion model).

Evaluation

We evaluate sim agents based on how well they imitate logged data. In theory, the perfect sim agent is a probabilistic model that could match the distribution of logged futures from the same history. In practice, we have only a single logged future for each logged history, making comparisons between these two distributions intractable.

We therefore score submissions by estimating densities from history-conditioned sim agent rollouts, then computing the negative log likelihood of the logged future under this density. The futures are represented with behavior-characterizing measures from three categories, explained below: motion, agent interactions, and road / map adherence.

Agent motion

Since we are not enforcing any motion model during simulation, we want to make sure the simulated motion of agents is consistent with what we see in the data. To capture motion features, we compute:

Linear speed: magnitude of the delta in (x,y,z) at each step.

Angular speed: signed delta in yaw between each step.

Linear acceleration: signed delta of speed magnitudes above, for each step.

Angular acceleration: signed delta in angular speeds.
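The four motion features above can be sketched with finite differences over a single trajectory. This is an illustration under stated assumptions, not the official metric code: the function names are hypothetical, the step duration `dt` is a parameter supplied by the caller, dividing each delta by `dt` is a choice made here, and yaw differences are wrapped to [-pi, pi) so that a turn across the ±pi boundary is not counted as a near-full rotation.

```python
import math
from typing import Dict, List, Sequence, Tuple


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def motion_features(xyz: Sequence[Tuple[float, float, float]],
                    yaw: Sequence[float],
                    dt: float) -> Dict[str, List[float]]:
    """Finite-difference motion features for one agent trajectory."""
    # Linear speed: magnitude of the (x, y, z) delta between consecutive steps.
    speed = [math.dist(p, q) / dt for p, q in zip(xyz, xyz[1:])]
    # Angular speed: signed, wrapped yaw delta between consecutive steps.
    angular_speed = [wrap_angle(b - a) / dt for a, b in zip(yaw, yaw[1:])]
    # Accelerations: signed deltas of the two speed sequences above.
    linear_accel = [(b - a) / dt for a, b in zip(speed, speed[1:])]
    angular_accel = [(b - a) / dt
                     for a, b in zip(angular_speed, angular_speed[1:])]
    return {
        "linear_speed": speed,
        "angular_speed": angular_speed,
        "linear_acceleration": linear_accel,
        "angular_acceleration": angular_accel,
    }
```

A trajectory with N steps yields N-1 speed values and N-2 acceleration values, since each difference consumes one step.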

Agent interactions

These features try to capture the interaction of agents with each other. They include:

Collision indication: a boolean identifying if the object collided with something else.

Distance to nearest object: minimum distance of any other agent to the ADV. If negative, the value corresponds to a collision.

Time to collision: time (in seconds) before the agent collides with another agent, assuming constant speed.
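To make the constant-speed assumption behind time to collision concrete, here is a deliberately simplified sketch. It approximates each agent by a circle rather than an oriented bounding box and assumes both agents keep their current velocity vector; the function name and the circle approximation are assumptions for illustration and do not reproduce the challenge's actual computation.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def time_to_collision(pos_a: Vec, vel_a: Vec, radius_a: float,
                      pos_b: Vec, vel_b: Vec, radius_b: float) -> float:
    """Seconds until two circles touch under constant velocity (inf if never)."""
    # Work in the frame of agent a: relative position and relative velocity.
    px, py = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    vx, vy = vel_b[0] - vel_a[0], vel_b[1] - vel_a[1]
    reach = radius_a + radius_b

    # Solve |p + v t|^2 = reach^2, i.e. a t^2 + b t + c = 0.
    a = vx * vx + vy * vy
    b = 2.0 * (px * vx + py * vy)
    c = px * px + py * py - reach * reach

    if c <= 0.0:
        return 0.0       # already touching or overlapping
    if a == 0.0:
        return math.inf  # no relative motion, the gap never closes
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return math.inf  # paths never come within reach
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t >= 0.0 else math.inf  # negative root: moving apart
```

For example, an agent moving at 10 m/s toward a stationary agent 20 m ahead, with 1 m radii, closes the 18 m gap in 1.8 seconds.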

Map adherence

These features try to capture the behavior of agents on the map, irrespective of each other. They include:

Offroad indication: a boolean identifying if an object is off the driveable part of the map.

Distance to road edge: minimum distance to the edge of the driveable part of the map. This is negative inside the road and positive elsewhere.

Distributional setting and aggregation

We want to measure how likely the logged data is under the simulated distribution of behavior. To do this, at each step and for each object, we approximate the distribution of the 32 simulated samples using a histogram (or a kernel density estimate) and evaluate the likelihood of the logged sample under it.
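The histogram variant of this estimate can be sketched as follows for one feature, one object, and one step. The bin range, the number of bins, and the additive smoothing constant are hypothetical parameters chosen for illustration (the real values belong to the challenge configuration), and the function returns the log of the probability mass of the logged value's bin rather than a normalized density.

```python
import math
from typing import Sequence


def histogram_log_likelihood(samples: Sequence[float], logged_value: float,
                             min_val: float, max_val: float, num_bins: int,
                             smoothing: float = 1.0) -> float:
    """Log-probability of the logged value's bin under a smoothed histogram."""
    width = (max_val - min_val) / num_bins

    def bin_index(value: float) -> int:
        # Clip out-of-range values into the first or last bin.
        clipped = min(max(value, min_val), max_val)
        return min(int((clipped - min_val) / width), num_bins - 1)

    # Additive smoothing keeps every bin non-empty, so the log is always finite.
    counts = [smoothing] * num_bins
    for sample in samples:
        counts[bin_index(sample)] += 1.0

    return math.log(counts[bin_index(logged_value)] / sum(counts))
```

If all 32 simulated samples fall in one bin, a logged value in that same bin scores close to log(1) = 0, while a logged value in an empty bin receives only the smoothing mass and a much lower log likelihood.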

This gives us, for each scenario, a likelihood score for each of the simulated features. We aggregate these scores into a single value, which we call the meta-metric, using a weighted mean over all the features (the weights are specified in the configuration file). Submissions are scored by this meta-metric, where a higher score means a better submission.
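The aggregation step is a plain weighted mean, sketched below. The feature names and weights in the example are placeholders invented for illustration; the actual values are the ones in the challenge configuration file.

```python
from typing import Dict


def meta_metric(likelihoods: Dict[str, float],
                weights: Dict[str, float]) -> float:
    """Weighted mean of per-feature likelihood scores."""
    total_weight = sum(weights[name] for name in likelihoods)
    weighted_sum = sum(weights[name] * score
                       for name, score in likelihoods.items())
    return weighted_sum / total_weight


# Placeholder feature names and weights, for illustration only.
example_scores = {"linear_speed": 0.5, "collision_indication": 1.0}
example_weights = {"linear_speed": 1.0, "collision_indication": 3.0}
example_meta = meta_metric(example_scores, example_weights)
```

With these placeholder numbers the result is (1.0 * 0.5 + 3.0 * 1.0) / 4.0 = 0.875, so a feature with a larger weight pulls the meta-metric more strongly toward its own score.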

Rules Regarding Awards

Please see the Waymo Open Dataset Challenges Official Rules.