Imitation Is Not Enough: Robustifying Imitation with Reinforcement Learning for Challenging Driving Scenarios

Authors

Abstract

Imitation learning (IL) is a simple and powerful way to use high-quality human driving data, which can be collected at scale, to produce human-like behavior. However, policies based on imitation learning alone often fail to sufficiently account for safety and reliability concerns. This paper presents a method that combines imitation learning with reinforcement learning using simple rewards to substantially improve the safety and reliability of driving policies over those learned from imitation alone. In particular, we train a policy on over 100k miles of urban driving data, and measure its effectiveness in test scenarios grouped by different levels of collision likelihood. Our analysis shows that while imitation can perform well in low-difficulty scenarios that are well-covered by the demonstration data, our proposed approach significantly improves robustness on the most challenging scenarios (over 38% reduction in failures). To our knowledge, this is the first application of a combined imitation and reinforcement learning approach in autonomous driving that utilizes large amounts of real-world human driving data.

Overview

To our knowledge, this is the first large-scale application of a combined imitation and reinforcement learning (RL) approach to autonomous driving that uses real-world urban human driving data (over 100k miles). RL and imitation learning offer complementary strengths. RL can use reward signals to optimize objectives directly, but designing a good reward is difficult. Imitation learning, on the other hand, avoids the need for a hand-designed reward, but it lacks explicit knowledge of what constitutes good driving, such as collision avoidance, and suffers from covariate shift. By combining both approaches, we develop an agent that requires only a simple reward function yet performs well in rare and challenging driving scenarios.

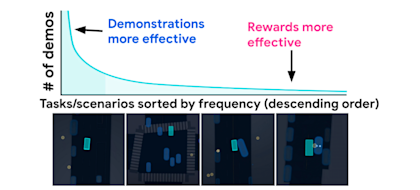

The demonstration-reward trade-off. As the amount of data for a particular scenario decreases, reward signals become more important for learning.

We systematically evaluate our approach and the baselines on slices of the dataset grouped by difficulty. Combining IL and RL improves the safety and reliability of policies over those learned from imitation alone, with over 38% fewer safety events in the most difficult bucket.
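Difficulty slicing can be sketched as follows. This is a minimal illustration, not the paper's evaluation code: it assumes each evaluation scenario carries a hypothetical scalar difficulty score (for example, an estimated collision likelihood) and a binary failure outcome, and that a bucket named TopK means the K% hardest scenarios. The names `ScenarioResult`, `top_k_percent`, and `failure_rate` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ScenarioResult:
    difficulty: float  # hypothetical difficulty score, e.g. an estimated collision likelihood
    failed: bool       # True if the rollout had a safety event (collision or off-road)


def top_k_percent(results: Sequence[ScenarioResult], k: float) -> List[ScenarioResult]:
    """Return the hardest k% of scenarios (at least one), hardest first."""
    if not 0 < k <= 100:
        raise ValueError("k must be in (0, 100]")
    ranked = sorted(results, key=lambda r: r.difficulty, reverse=True)
    n = max(1, round(len(ranked) * k / 100))
    return ranked[:n]


def failure_rate(results: Sequence[ScenarioResult]) -> float:
    """Fraction of scenarios with a safety event (lower is better)."""
    return sum(r.failed for r in results) / len(results)


# Toy data: 10 scenarios with difficulty 0.0 .. 0.9; only the two hardest fail.
results = [ScenarioResult(difficulty=i / 10, failed=i >= 8) for i in range(10)]

for name, k in [("Top10", 10), ("Top50", 50), ("All", 100)]:
    print(name, failure_rate(top_k_percent(results, k)))
# Top10 1.0
# Top50 0.4
# All 0.2
```

On this toy data the failure rate rises as the bucket narrows to harder scenarios, which is the kind of degradation curve the difficulty-sliced comparison is designed to expose.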

Method

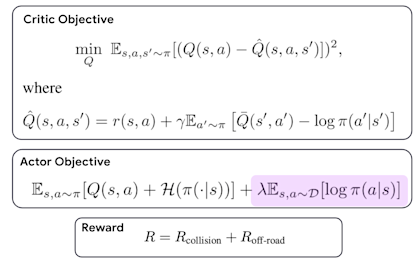

We propose BC-SAC as an algorithm for learning autonomous driving agents, which combines Soft Actor-Critic (SAC) with a behavior cloning (BC) learning term added to the actor objective. The critic update remains the same as in SAC. We use a simple reward function that linearly combines collision and off-road rewards.
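As a sketch in standard SAC notation (our notation, not necessarily the paper's exact formulation), assume a per-step reward that penalizes collision and off-road events with hypothetical non-negative weights w_c and w_o, an entropy temperature α, a hypothetical BC weight λ, a replay buffer D of simulated states, and a set D_demo of logged human state-action pairs. The actor then minimizes the usual SAC actor loss plus the negative log-likelihood of the logged human actions:

```latex
r_t = -\big( w_c \, \mathbb{1}[\text{collision}_t] + w_o \, \mathbb{1}[\text{off-road}_t] \big)

\mathcal{L}_{\pi}(\phi) =
  \mathbb{E}_{s \sim \mathcal{D}} \Big[ \mathbb{E}_{a \sim \pi_\phi(\cdot \mid s)}
    \big[ \alpha \log \pi_\phi(a \mid s) - Q_\theta(s, a) \big] \Big]
  - \lambda \, \mathbb{E}_{(s, a) \sim \mathcal{D}_{\text{demo}}}
    \big[ \log \pi_\phi(a \mid s) \big]
```

Setting λ = 0 recovers the SAC actor update, while dropping the first expectation leaves plain behavior cloning; the critic Q_θ is trained exactly as in SAC.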

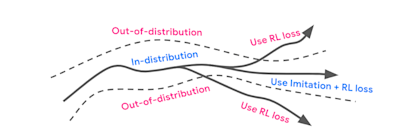

The advantage of BC-SAC can be intuitively visualized in the figure above. For in-distribution states, both imitation and RL terms contribute to learning. However, in out-of-distribution states where no demonstration data is available, the agent can fall back to learning from reward signals.
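The fallback behavior can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes a hypothetical 1-D Gaussian policy, precomputed critic values Q(s, a) for actions sampled from the policy, and uses `None` as a hypothetical marker for states with no logged human action. In practice the loss would be minimized with respect to the policy parameters using an autodiff framework, and BC and RL samples may be drawn from separate batches; this sketch only evaluates the loss value.

```python
import math
from typing import Optional, Sequence


def gaussian_log_prob(a: float, mean: float, std: float) -> float:
    """log N(a; mean, std^2) for a hypothetical 1-D Gaussian policy head."""
    z = (a - mean) / std
    return -0.5 * z * z - math.log(std) - 0.5 * math.log(2.0 * math.pi)


def per_state_actor_loss(
    q_value: float,
    sampled_log_prob: float,
    demo_log_prob: Optional[float],
    alpha: float = 0.1,
    bc_weight: float = 1.0,
) -> float:
    """SAC actor loss, plus a BC term when a logged human action exists."""
    # SAC term: entropy-regularized and driven by the critic (hence by the reward).
    loss = alpha * sampled_log_prob - q_value
    if demo_log_prob is not None:
        # BC term: negative log-likelihood of the demonstrated action.
        loss += bc_weight * (-demo_log_prob)
    # Without a demonstration (out-of-distribution state), only the reward-driven term remains.
    return loss


def batch_actor_loss(
    q_values: Sequence[float],
    sampled_log_probs: Sequence[float],
    demo_log_probs: Sequence[Optional[float]],
    alpha: float = 0.1,
    bc_weight: float = 1.0,
) -> float:
    """Mean per-state actor loss over a batch."""
    losses = [
        per_state_actor_loss(q, lp, dlp, alpha, bc_weight)
        for q, lp, dlp in zip(q_values, sampled_log_probs, demo_log_probs)
    ]
    return sum(losses) / len(losses)


# State 0 has a logged human action; state 1 was reached off the logged trajectory.
demo_lp = gaussian_log_prob(a=0.0, mean=0.0, std=1.0)
print(batch_actor_loss([2.0, 1.0], [-1.0, -0.5], [demo_lp, None]))
```

Because `per_state_actor_loss` adds the BC term only where a demonstration exists, states visited off the logged trajectory are shaped purely by the critic, which is itself trained from the collision and off-road reward.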

Result Highlights

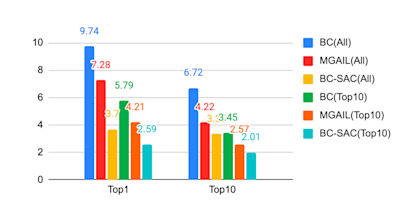

BC-SAC outperforms the imitation learning baselines, open-loop BC and closed-loop MGAIL [1], especially on the most challenging scenarios.

The figure above plots failure rates (lower is better) on the most challenging evaluation sets, Top1 and Top10, for policies trained on All and on Top10. BC-SAC consistently achieves the lowest failure rates.

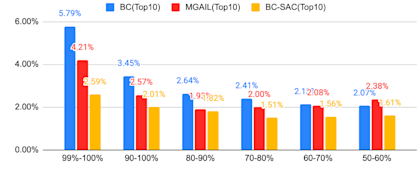

The figure above plots failure rates of BC, MGAIL, and BC-SAC across scenarios of varying difficulty levels (50% to 100%; lower is better). While all methods perform worse as the evaluation set becomes more challenging, BC-SAC always performs best and degrades the least.

[1] Bronstein, Eli, et al. "Hierarchical Model-Based Imitation Learning for Planning in Autonomous Driving." 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.

Visualizations

Top1 Case - Left: MGAIL, Right: BC-SAC. MGAIL does not leave sufficient clearance for a car pulling out and has a close call. BC-SAC leaves sufficient clearance.

Top1 Case - Left: MGAIL, Right: BC-SAC. MGAIL proceeds on a narrow road with parked vehicles on both sides, resulting in a collision. BC-SAC conservatively slows down.

Top1 Case - Left: MGAIL, Right: BC-SAC. Because of the large vehicle in the adjacent lane, MGAIL overlaps the curb. BC-SAC accelerates to avoid being nudged into the curb.

Top1 Case - Left: MGAIL, Right: BC-SAC. The MGAIL agent drives too close to the double-parked car while a pedestrian is exiting a vehicle, causing a collision; the BC-SAC agent provides sufficient clearance and avoids the collision.

Top10 Case - Left: MGAIL, Right: BC-SAC. A parked car occupies part of the ego vehicle’s lane. The MGAIL agent stays in its current lane and collides with the parked car; the BC-SAC agent changes lanes early and navigates around it.

Top10 Case - Left: MGAIL, Right: BC-SAC. MGAIL is sandwiched between two vehicles. BC-SAC changes lanes early and avoids the collision.

Top10 Case - Left: MGAIL, Right: BC-SAC. MGAIL proceeds straight and has a collision with the large vehicle. BC-SAC successfully navigates around the large vehicle without a collision.

Top10 Case - Left: MGAIL, Right: BC-SAC. MGAIL does not provide sufficient clearance and has a close call with the large vehicle, while BC-SAC leaves appropriately wide clearance and avoids the incoming vehicle.

Top10 Case - Left: SAC, Right: BC-SAC. Because progress is not explicitly rewarded, SAC tends to slow down to avoid safety events. In this example, the SAC agent slows down in the intersection, causing a rear-end collision; with the behavior cloning loss, our approach drives through the intersection with an appropriate speed profile and without a collision.

Top50 Case - Left: MGAIL, Right: BC-SAC. MGAIL overlaps the road edges. BC-SAC proceeds normally without off-road events.